GZERO AI

The latest on artificial intelligence and its implications - from the GZERO AI newsletter.

Presented by

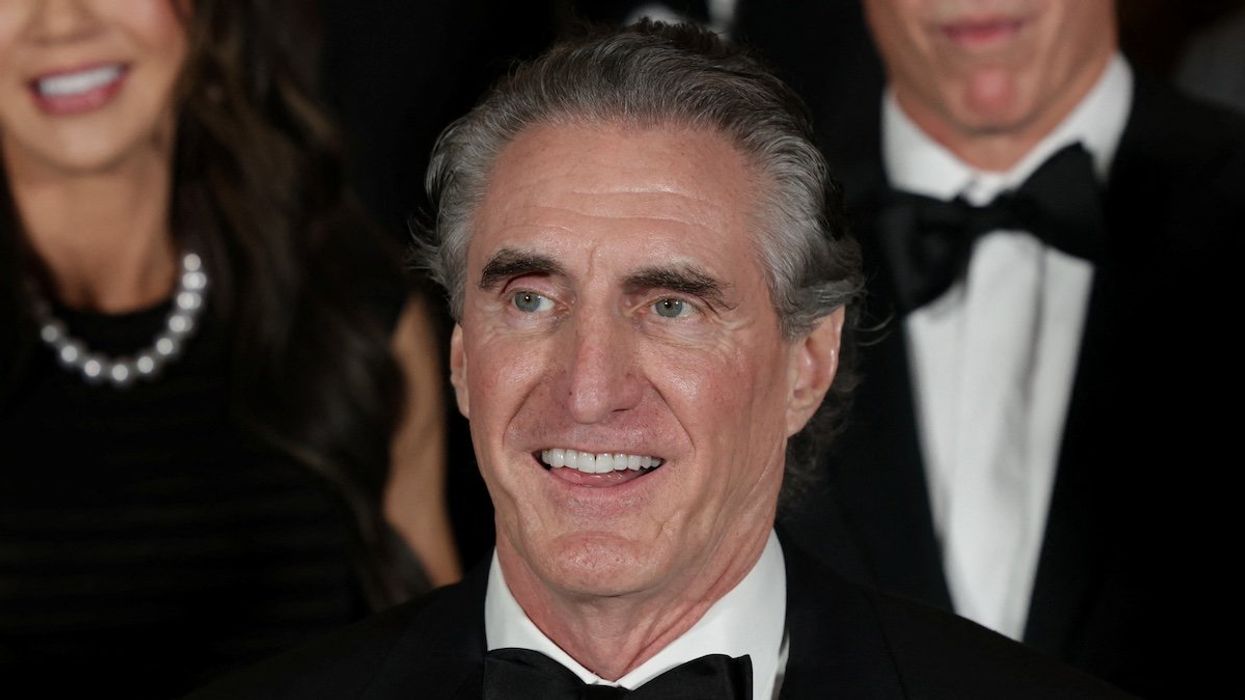

Taylor Owen, professor at the Max Bell School of Public Policy at McGill University and director of its Centre for Media, Technology & Democracy, co-hosts GZERO AI, our new weekly video series intended to help you keep up and make sense of the latest news on the AI revolution. In this episode of the series, Taylor Owen takes a look at the OpenAI-Sam Altman drama.

Hi, I'm Taylor Owen. This is GZERO AI. So if you're watching this video, then like me, you've probably been glued to your screen over the past week, watching the psychodrama play out at OpenAI, a company literally at the center of the current AI moment we're in.

Sam Altman, the CEO of OpenAI, was kicked out of his company by his own board of directors. Under a week later, he was back as CEO, and all but one of those board members were gone. All of this would be amusing, and it certainly was in a glib sort of way, if the consequences weren't so profound. I've been thinking a lot about how to make sense of all this, and I keep coming back to this profound sense of deja vu.

First, though, a quick recap. We don't know all of the details, but it really does seem to be the case that at the core of this conflict was a tension between two different views of what OpenAI was and will be in the future. Remember, OpenAI was founded in 2015 as a nonprofit, and a nonprofit because it was choosing a mission of building technologies to benefit all of humanity over a private corporate mission of increasing value for shareholders. When they started running out of money, though, a couple of years later, they embedded a for-profit entity within this nonprofit structure so that they could capitalize on the commercial value of the products that the nonprofit was building. This is where the tension lay, between the incentives of a for-profit engine and the values and mission of a nonprofit board structure.

All of this can seem really new. OpenAI was building legitimately groundbreaking technologies, technologies that could transform our world. But I think the wider problem here is not a new one. This is where I was getting deja vu. Back in the early days of Web 2.0, there was also a huge amount of excitement over a new disruptive technology. In this case, the power of social media. In some ways, events like the Arab Spring were very similar to the emergence of ChatGPT, a seismic event that demonstrated to broader society the power of an emerging technology.

Now, I've spent the last 15 years studying the emergence of social media, and in particular how we as societies can balance the immense benefits and upside of these technologies with the clear downside risks as they emerge. I actually think we got a lot of that balance wrong. It's at times like this, when a new technology emerges, that we need to think carefully about what lessons we can learn from the past. I want to highlight three.

First, we need to be really clear-eyed about who has power in the technological infrastructure we're deploying. In the case of OpenAI, it seems very clear that the profit incentives won over the broader social mandate. Power, though, is also about who controls infrastructure. In this case, Microsoft played a big role. They controlled the compute infrastructure, and they wielded this power to come out on top in this turmoil.

Second, we need to bring the public into this discussion. Ultimately, a technology will only be successful if it has legitimate citizen buy-in, if it has a social license. What are citizens supposed to think when they hear the very people building these technologies disagreeing over their consequences? Ilya Sutskever, for example, said just a month ago, "If you value intelligence over all human qualities, you're going to have a bad time," when talking about the future of AI. This kind of comment, coming from the very people who are building the technologies, is just exacerbating an already deep insecurity many people feel about the future. Citizens need to be allowed, enabled, and empowered to weigh in on the conversation about the technologies that are being built on their behalf.

Finally, we simply need to get the governance right this time. We didn't last time. For over 20 years, we've largely left the social web unregulated, and it's had disastrous consequences. This means not being confused by technical or systemic complexity masking lobbying efforts. It means applying existing laws and regulations first (in the case of AI, things like copyright, online safety rules, data privacy rules, and competition policy) before we get too bogged down in big, large-scale AI governance initiatives. We just can't let the perfect be the enemy of the good. We need to iterate and experiment, and countries need to learn from each other in how they step into this complex new world of AI governance.

Unfortunately, I worry we're repeating some of the same mistakes of the past. Once again, we're moving fast and we're breaking things. If the new board of OpenAI is any indication of how they're thinking about governance and how the AI world in general values and thinks about governance, there's even more to worry about. Three white men calling the shots at a tech company that could very well transform our world. We've been here before, and it doesn't end well. Our failure to adequately regulate social media had huge consequences. While the upside of AI is undeniable, it's looking like we're making many of the same mistakes, only this time the consequences could be even more dire.

I'm Taylor Owen, and thanks for watching.
