
Should AI content be protected as free speech?

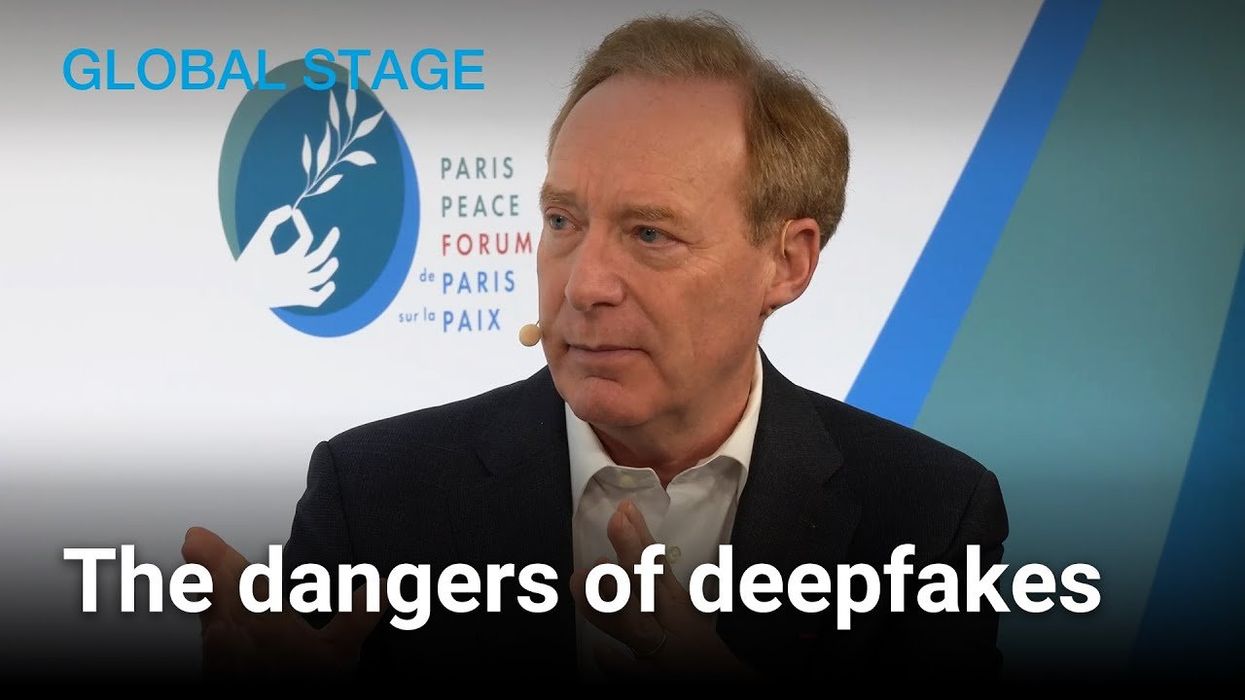

Where do AI-generated misinformation, fabricated imagery, and synthetic audio fit within free speech protections? Eléonore Caroit, vice president of the French Parliament’s Foreign Affairs Committee, weighs in on this difficult question at a GZERO Global Stage discussion, live from the 2023 Paris Peace Forum.

Nov 10, 2023