Marietje Schaake, International Policy Fellow, Stanford Human-Centered Artificial Intelligence, and former European Parliamentarian, co-hosts GZERO AI, our new weekly video series intended to help you keep up with and make sense of the latest news on the AI revolution. In this episode, she takes you behind the scenes of the first-ever UK AI Safety Summit.

Last week, the AI Summit took place, and I'm sure you've read all the headlines, but I thought it would be fun to also take you behind the scenes a little bit. So I arrived early in the morning of the day that the summit started, and everybody was made to go through security between 7 and 8 AM, so pretty early, and the program only started at 10:30. So what that led to was a long reception over coffee where old friends and colleagues met, new people were introduced, and all participants from business, government, civil society, and academia really started to mingle.

And maybe that was a part of the success of the summit, which then started with a formal opening with remarkably global representation. There had been some discussion about whether it was appropriate to invite the Chinese government, but indeed a Chinese minister, as well as representatives from India and from Nigeria, were there to underline that the challenges that governments have to deal with around artificial intelligence are global ones. And I think that that was an important symbol that the UK government sought to underline. Now, there was a little bit of surprise in the opening when Secretary Raimondo of the United States announced the US would also initiate an AI Safety Institute right after the UK government had announced its own. And so it did make me wonder why not just work together globally? But I guess they each want their own institute.

And those were perhaps the more concrete, tangible outcomes of the conference. Other than that, it was more a statement of intent to look further into AI safety risks. And ahead of the conference, there had been a lot of discussion about whether the UK government was taking too narrow a focus on AI safety, whether they had been leaning too much towards the effective altruism, existential risk camp. But in practice, the program gave a lot of room for discussions, and I thought that was really important, about the known and current-day risks that AI presents. For example, to civil rights, when we think about discrimination, or to human rights, when we think about the threats to democracy, both from disinformation that generative AI can put on steroids and from the real question of how to govern it at all when companies have so much power and there's such a lack of transparency. So civil society leaders who were worried that they were not sufficiently heard in the program will hopefully feel a little bit more reassured, because I spoke to a wide variety of civil society representatives who were a key part of the participants, alongside government, business, and academic leaders.

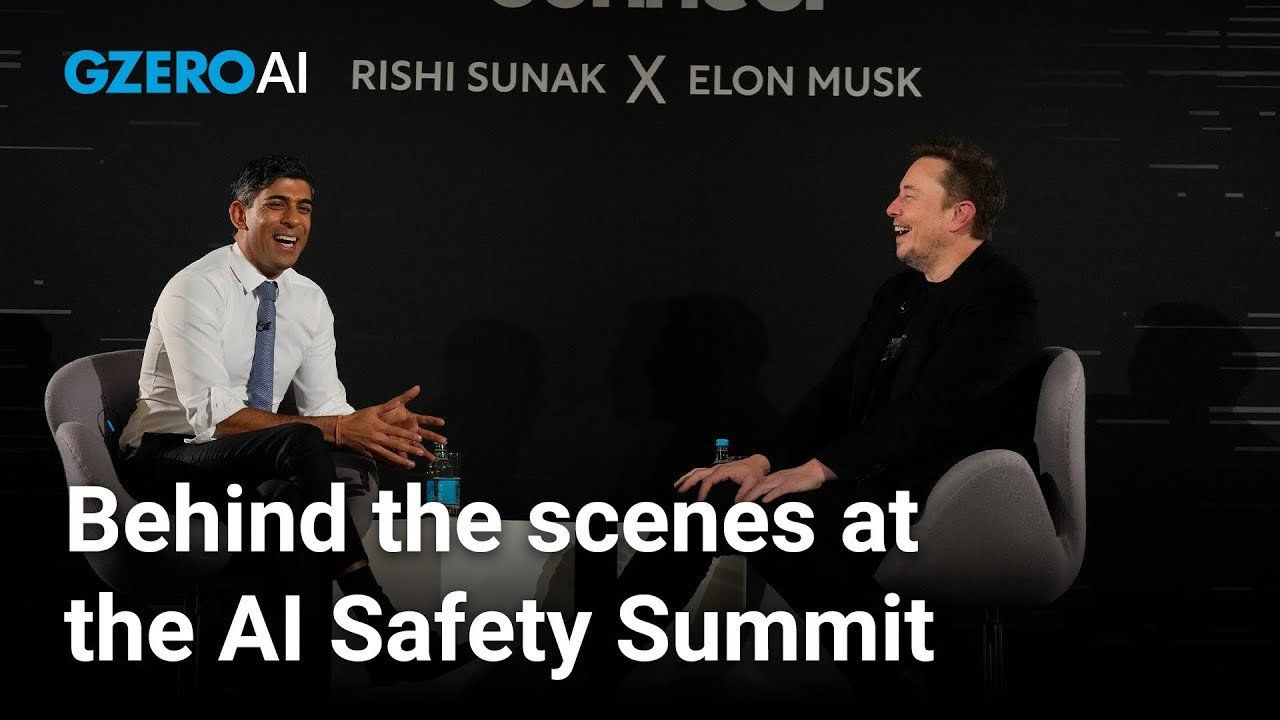

So, when I talked to some of the first generation of thinkers and researchers in the field of AI, for them it was a significant moment because never had they thought that they would be part of a summit next to government leaders. I mean, for a long time they were mostly in their labs researching AI, and suddenly here they were being listened to at the podium alongside government representatives. So in a way, they were a little bit starstruck, and I thought that was funny because it was probably the same the other way around, certainly for the Prime Minister, who really looked like a proud student when he was interviewing Elon Musk. And that was another surprising development: shortly after the press conference had taken place, a moment to shine in the media with the outcomes of the summit, Prime Minister Sunak decided to spend the airtime, and certainly the social media coverage, interviewing Elon Musk, who then predicted that AI would eradicate lots and lots of jobs. And remarkably, that was a topic that barely got mentioned at the summit, so maybe it was a good thing that it became part of the discussion after all, albeit in an unusual way.

