Analysis

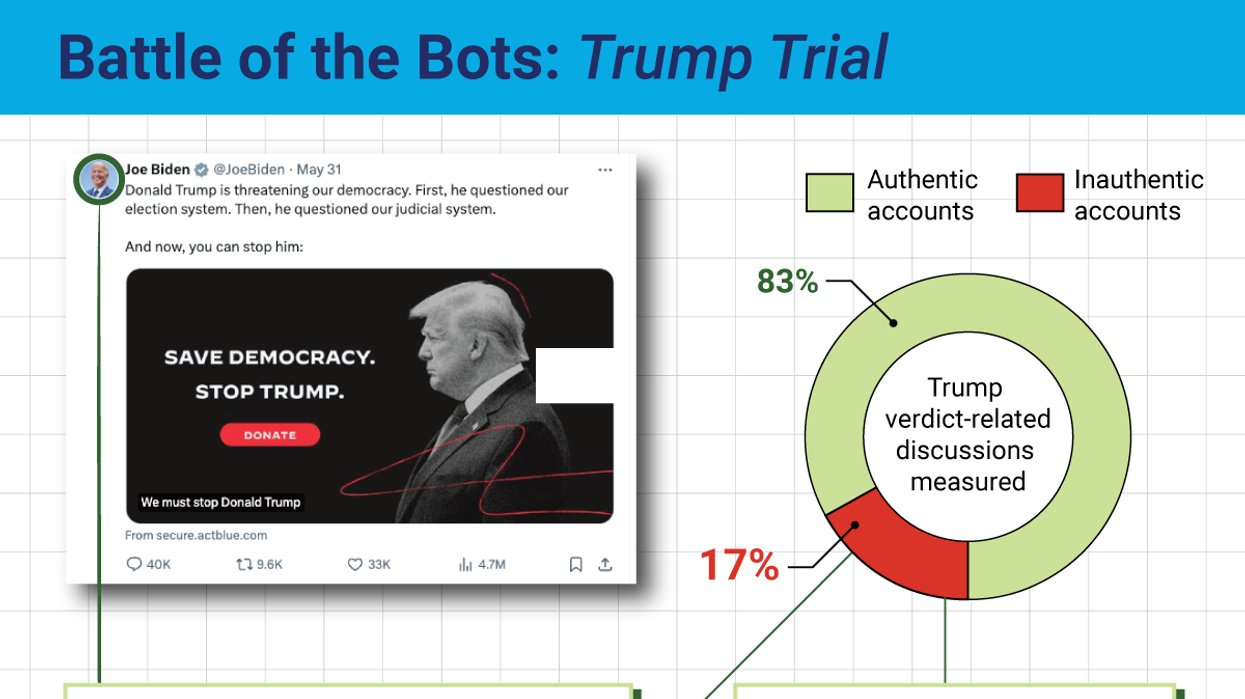

Battle of the bots: Trump trial

Talk about courting attention. Former President Donald Trump’s guilty verdict in his hush money trial on 34 felony counts captured the public’s imagination – some to rejoice, others to reject – and much of the debate played out on X, formerly known as Twitter.

Jun 19, 2024